In the ever-evolving landscape of technology, few innovations have generated as much excitement—and as many questions—as quantum computing. While traditional computing has been the backbone of technological progress for decades, quantum computing represents a paradigm shift, promising to solve problems that are currently intractable for classical systems. But what exactly sets quantum computing apart from its classical counterpart? In this deep dive, we’ll explore the fundamental differences between quantum and traditional computing, shedding light on the science, mechanics, and potential implications of this groundbreaking field.

The Foundation: Bits vs. Qubits

At the heart of any computer lies its basic unit of information. For traditional computers, this is the bit, which can exist in one of two states: 0 or 1. All computations, no matter how complex, are ultimately reduced to operations involving these binary values. This simplicity has been the cornerstone of modern computing, enabling everything from word processing to artificial intelligence.

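In contrast, quantum computers use qubits (quantum bits) as their fundamental unit of information. A qubit can be placed in a superposition: a weighted combination of 0 and 1, described by two complex amplitudes whose squared magnitudes give the probabilities of reading 0 or 1 when the qubit is measured. Qubits can also be entangled, meaning their joint state cannot be described one qubit at a time, and measurements on entangled qubits produce correlated outcomes regardless of the distance between them. Together, these properties let a quantum computer manipulate a state that, for n qubits, is described by 2^n amplitudes at once. This does not mean it simply tries every answer in parallel. A measurement returns only one outcome, so useful quantum algorithms rely on interference to make correct answers likely. For certain problems, this is expected to yield speedups far beyond what classical systems can achieve.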
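To make superposition concrete, here is a minimal sketch in plain Python (standard library only). It represents a single qubit as a pair of complex amplitudes and applies the Born rule to get measurement probabilities. The function name `measurement_probabilities` and the two example states are illustrative choices and are not part of any quantum SDK.

```python
import cmath
import math

# A qubit state is a pair of complex amplitudes (alpha, beta) for |0> and |1>.
# Normalization requires |alpha|^2 + |beta|^2 == 1.

def measurement_probabilities(alpha: complex, beta: complex) -> tuple[float, float]:
    """Born rule: the probability of reading 0 or 1 is the squared magnitude of each amplitude."""
    p0 = abs(alpha) ** 2
    p1 = abs(beta) ** 2
    if not math.isclose(p0 + p1, 1.0, abs_tol=1e-9):
        raise ValueError("state is not normalized")
    return p0, p1

# Equal superposition (|0> + |1>) / sqrt(2): behaves like a fair coin when measured.
plus = (1 / math.sqrt(2), 1 / math.sqrt(2))

# Unequal superposition with a relative phase. The phase does not change the
# single-measurement probabilities; it only affects how amplitudes interfere later.
biased = (math.sqrt(0.8), math.sqrt(0.2) * cmath.exp(1j * math.pi / 3))

print(measurement_probabilities(*plus))    # approximately (0.5, 0.5)
print(measurement_probabilities(*biased))  # approximately (0.8, 0.2)
```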

For an accessible introduction to quantum principles, visit IBM’s Quantum Computing Resources.

Key Differences Between Quantum and Traditional Computing

1. Processing Power: Exponential vs. Linear Scaling

Traditional computers process information by applying operations to definite bit values, largely one step after another. Multi-core processors and GPUs add parallelism, but classical performance grows roughly in proportion to the resources added. Doubling the cores at best doubles throughput, and n bits of memory hold exactly one of their 2^n possible configurations at any moment.

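Quantum computers scale differently. Thanks to superposition and entanglement, the state of an n-qubit system is described by 2^n amplitudes, one for each possible bit string. For example, a 300-qubit register is described by 2^300 (about 2 × 10^90) amplitudes. That is more than the roughly 10^80 atoms estimated to exist in the observable universe, so even the most powerful supercomputers today could not store such a state explicitly. Because measurement returns only 300 classical bits, however, this capacity translates into a speedup only when an algorithm can exploit interference.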
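The exponential growth of the state description can be checked with simple arithmetic. This sketch assumes that a classical simulator stores one complex128 amplitude (16 bytes) per basis state, which is a common but not universal choice. `state_vector_bytes` is a hypothetical helper.

```python
# Bytes needed to store a full n-qubit state vector on a classical machine,
# assuming one complex128 amplitude (two 64-bit floats = 16 bytes) per basis state.
BYTES_PER_AMPLITUDE = 16

def state_vector_bytes(n_qubits: int) -> int:
    """Number of bytes needed for 2**n complex amplitudes."""
    return (2 ** n_qubits) * BYTES_PER_AMPLITUDE

for n in (10, 30, 50):
    print(f"{n} qubits -> {state_vector_bytes(n):,} bytes")

# 2**300 amplitudes versus the commonly cited estimate of ~10**80 atoms.
ATOMS_IN_OBSERVABLE_UNIVERSE = 10 ** 80  # order-of-magnitude estimate
print(2 ** 300 > ATOMS_IN_OBSERVABLE_UNIVERSE)  # True
```

Under that assumption, 30 qubits already need 16 GiB and 50 qubits need about 16 PiB. This is why storing the full state vector becomes impractical beyond a few dozen qubits.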

This scaling makes quantum computing promising for specific problems in cryptography, optimization, and molecular simulation, where the cost of classical approaches grows rapidly with input size.

2. Deterministic vs. Probabilistic Outcomes

Classical computers provide deterministic results; given the same input, they will always produce the same output. This reliability is crucial for applications like banking, engineering, and communications.

Quantum computers, on the other hand, are probabilistic at the point of measurement. Measuring a register of qubits returns a single bit string, drawn at random with probabilities given by the squared magnitudes of the amplitudes. In effect, a quantum computation yields a probability distribution over possible outcomes. To extract meaningful results, algorithms are designed to amplify the probability of correct answers while suppressing incorrect ones (amplitude amplification), and runs are typically repeated to gather statistics. Quantum error correction addresses a separate problem: it protects the fragile quantum state from hardware noise, which is another source of inaccuracy.
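Amplitude amplification can be illustrated with a small classical simulation of Grover's algorithm, the best-known example of the technique. This sketch keeps a real-valued state vector for 3 qubits (8 basis states) and marks one arbitrary target index. It then alternates the oracle's sign flip with a reflection about the mean amplitude. This is a pedagogical model and does not reflect how a quantum device actually executes the algorithm. The function name is hypothetical.

```python
import math

def grover_probabilities(n_qubits: int, marked: int, iterations: int) -> list[float]:
    """Simulate Grover-style amplitude amplification on a real-valued state vector.

    Returns the probability of measuring the marked index after each iteration.
    """
    size = 2 ** n_qubits
    # Start in the uniform superposition (what Hadamards on every qubit produce).
    amps = [1 / math.sqrt(size)] * size
    history = []
    for _ in range(iterations):
        # Oracle: flip the sign of the marked basis state's amplitude.
        amps[marked] = -amps[marked]
        # Diffusion: reflect every amplitude about the mean amplitude.
        mean = sum(amps) / size
        amps = [2 * mean - a for a in amps]
        history.append(amps[marked] ** 2)
    return history

probs = grover_probabilities(n_qubits=3, marked=5, iterations=2)
print(probs)  # roughly [0.78, 0.95], versus 1/8 = 0.125 without amplification
```

Starting from a 1-in-8 chance, two iterations raise the probability of the marked state to about 95%. Running more than roughly (π/4)√N iterations overshoots, and the probability starts to fall again, so the iteration count has to be chosen carefully.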

For insights into quantum error correction, refer to this article by Nature Physics.

3. Algorithms: From Boolean Logic to Quantum Gates

Classical computers execute instructions using Boolean logic gates (AND, OR, NOT), which manipulate bits through predefined operations. These gates form the basis of all software and hardware interactions in traditional computing.

Quantum computers employ quantum gates, which manipulate qubits through operations described by unitary matrices in linear algebra. Common quantum gates include the Hadamard gate (used to create superpositions), the CNOT gate (used to entangle qubits), and the Pauli X, Y, and Z gates (bit and phase flips, equivalent to half-turn rotations of the qubit state). When combined into quantum circuits, these gates can factor large numbers (via Shor’s algorithm) super-polynomially faster than the best known classical methods. They can also search unstructured data (via Grover’s algorithm) with a quadratic speedup, needing on the order of √N queries instead of N.
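Because gates are just matrices, a two-qubit circuit can be simulated with a few lines of linear algebra. This sketch is written in plain Python so that it has no dependencies. It applies a Hadamard to the first qubit and then a CNOT, which produces an entangled Bell state. The helpers `kron` and `apply`, and the basis ordering |00>, |01>, |10>, |11>, are conventions chosen for this example and are not taken from any particular SDK.

```python
import math

Matrix = list[list[float]]
Vector = list[float]

def kron(a: Matrix, b: Matrix) -> Matrix:
    """Tensor (Kronecker) product of two matrices."""
    return [
        [a[i][j] * b[k][l] for j in range(len(a[0])) for l in range(len(b[0]))]
        for i in range(len(a)) for k in range(len(b))
    ]

def apply(m: Matrix, v: Vector) -> Vector:
    """Matrix-vector product: apply a gate to a state vector."""
    return [sum(m[i][j] * v[j] for j in range(len(v))) for i in range(len(m))]

s = 1 / math.sqrt(2)
H = [[s, s], [s, -s]]   # Hadamard: creates superposition
I = [[1, 0], [0, 1]]    # identity: leaves a qubit untouched
X = [[0, 1], [1, 0]]    # Pauli-X: the quantum NOT (bit flip)
# CNOT in basis order |00>, |01>, |10>, |11> (first qubit is the control):
# flips the second qubit only when the first qubit is 1.
CNOT = [[1, 0, 0, 0],
        [0, 1, 0, 0],
        [0, 0, 0, 1],
        [0, 0, 1, 0]]

print(apply(X, [1, 0]))              # [0, 1]: |0> flipped to |1>

state = [1, 0, 0, 0]                 # start in |00>
state = apply(kron(H, I), state)     # (|00> + |10>) / sqrt(2)
state = apply(CNOT, state)           # (|00> + |11>) / sqrt(2): a Bell state
print([round(a, 3) for a in state])  # [0.707, 0.0, 0.0, 0.707]
```

Only |00> and |11> survive, so measuring both qubits always gives matching results. This is the correlation that entanglement describes.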

To learn more about quantum algorithms, explore Microsoft’s Quantum Development Kit.

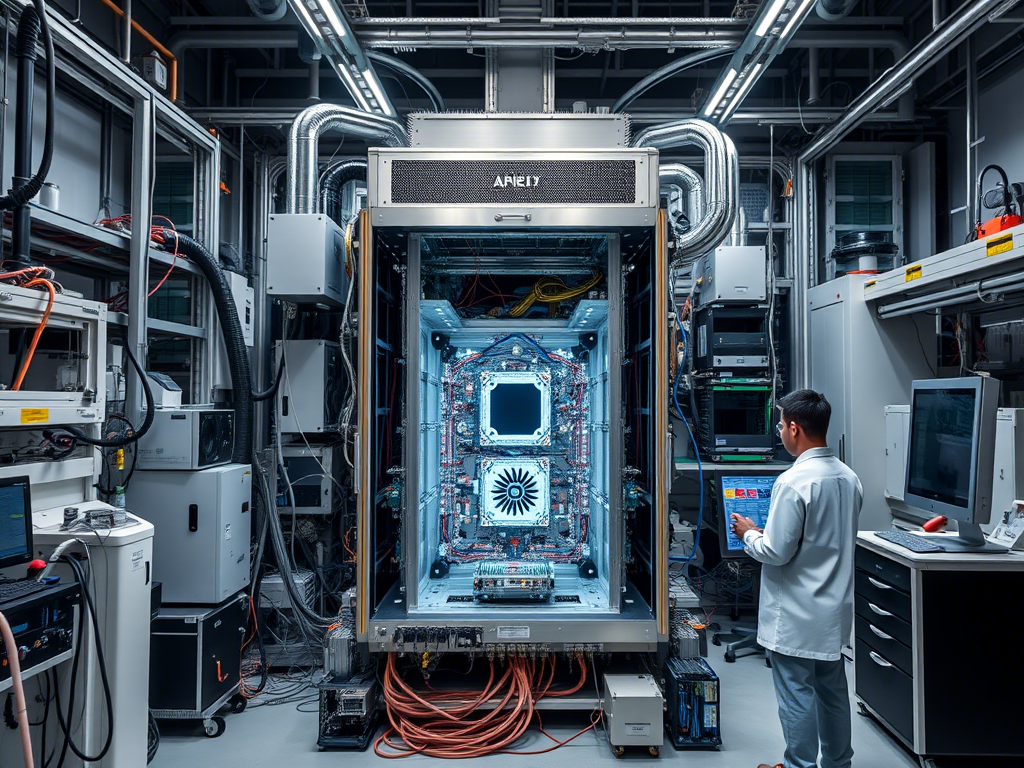

4. Hardware Architecture: Silicon Chips vs. Exotic Materials

The architecture of traditional computers is built around silicon-based transistors, which switch on and off to represent 0 and 1 and perform calculations. Over decades, miniaturization has packed billions of transistors onto a single chip, following the trend described by Moore’s Law and delivering exponential growth in computational power.

Quantum computers, however, require entirely different materials and architectures to maintain coherence, the fragile condition in which qubits preserve their superpositions and entanglement. Leading hardware approaches include superconducting circuits, trapped ions, and photonic systems, while topological qubits remain at an early, experimental stage. Each approach comes with its own advantages and challenges, but all share the goal of minimizing noise and decoherence, which can disrupt quantum states.
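Decoherence is often summarized by a relaxation time T1. The probability that an excited qubit has not yet decayed falls roughly as exp(-t/T1). The sketch below uses this simple exponential model with hypothetical values (T1 = 100 µs and 50 ns per gate layer) chosen only for illustration. Real devices vary widely and also suffer from other error sources.

```python
import math

def survival_probability(elapsed_s: float, t1_s: float) -> float:
    """Simple exponential relaxation model: P(still excited) = exp(-t / T1)."""
    return math.exp(-elapsed_s / t1_s)

# Hypothetical figures for illustration only, not specs of any real device.
T1 = 100e-6         # relaxation time: 100 microseconds
GATE_TIME = 50e-9   # duration of one gate layer: 50 nanoseconds

for depth in (100, 1_000, 10_000):
    p = survival_probability(depth * GATE_TIME, T1)
    print(f"depth {depth:>6}: {p:.3f}")
```

With these assumed numbers, a 1,000-layer circuit retains only about a 61% survival probability. This illustrates why circuit depth is so tightly constrained on noisy hardware.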

For a technical overview of quantum hardware, see Google Quantum AI’s research page.

Real-World Implications: Where Quantum Excels

While quantum computing is still in its infancy, its potential applications are already coming into focus. Here are some areas where quantum systems are expected to outperform classical ones:

1. Cryptography

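Quantum computers pose both a threat and an opportunity for encryption. Schemes like RSA rely on the difficulty of factoring large numbers. A sufficiently large, fault-tolerant quantum computer could factor such numbers efficiently using Shor’s algorithm, although today’s devices are far too small and noisy to threaten real RSA keys. Conversely, quantum key distribution (QKD) lets two parties establish a shared secret key whose security rests, in principle, on the laws of physics rather than on computational hardness, although practical implementations can still have flaws.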
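Shor’s algorithm turns factoring into period finding: once the period r of a^x mod N is known, a greatest-common-divisor calculation usually reveals a factor. The sketch below performs the period search by brute force, which is the step a quantum computer would accelerate, and then applies the classical post-processing to the textbook example N = 15. The function names are hypothetical.

```python
import math
from typing import Optional

def find_period(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1, found by brute force (requires gcd(a, n) == 1).

    This is the step Shor's algorithm speeds up on a quantum computer; this
    classical loop can take up to ~n steps, exponential in the bit length of n.
    """
    value, r = a % n, 1
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_from_period(a: int, n: int) -> Optional[tuple[int, int]]:
    """Classical post-processing used by Shor's algorithm."""
    g = math.gcd(a, n)
    if g != 1:
        return g, n // g  # lucky guess: a already shares a factor with n
    r = find_period(a, n)
    if r % 2 == 1:
        return None  # odd period: retry with another a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None  # trivial square root: retry with another a
    p = math.gcd(half - 1, n)
    return p, n // p

print(find_period(7, 15))         # 4
print(factor_from_period(7, 15))  # (3, 5)
```

The brute-force `find_period` loop is what makes this route impractical on classical hardware for large N, because r can be nearly as large as N itself.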

2. Optimization Problems

Industries ranging from logistics to finance face complex optimization challenges that are computationally expensive for classical systems. Quantum annealing and hybrid quantum-classical approaches are being explored for supply chain management, portfolio optimization, and machine learning, although a clear practical advantage over classical solvers has not yet been broadly demonstrated.
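Quantum annealers typically accept problems written as a QUBO (quadratic unconstrained binary optimization), in which the goal is to minimize a quadratic function of binary variables. As a minimal sketch, this example takes max-cut on a four-node cycle as its problem. The code builds the QUBO energy and finds its minimum by classical brute force, which only works for tiny instances. The graph and function names are illustrative.

```python
from itertools import product

# Max-cut on a 4-node cycle, written as a QUBO. Each edge (i, j) contributes
# -x_i - x_j + 2*x_i*x_j, which is -1 when the edge is cut (x_i != x_j)
# and 0 otherwise, so lower energy means more edges cut.
EDGES = [(0, 1), (1, 2), (2, 3), (3, 0)]
N_NODES = 4

def energy(x: tuple[int, ...]) -> int:
    """QUBO objective for the assignment x (one binary variable per node)."""
    return sum(-x[i] - x[j] + 2 * x[i] * x[j] for i, j in EDGES)

# Classical brute force checks all 2**n assignments; an annealer instead aims
# to settle into low-energy states of the same objective.
best = min(product((0, 1), repeat=N_NODES), key=energy)
print(best, energy(best))  # (0, 1, 0, 1) -4
```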

3. Drug Discovery and Material Science

Simulating molecular structures is notoriously difficult for classical computers because the number of variables needed to describe a molecule’s quantum state grows exponentially with its size. Quantum computers are expected to model chemical reactions and interactions natively at the quantum level, which could accelerate breakthroughs in drug discovery and material design.

4. Artificial Intelligence

Quantum-enhanced machine learning algorithms could dramatically improve pattern recognition, natural language processing, and generative models, unlocking new possibilities in AI development.

For real-world case studies, visit D-Wave Systems’ application showcase.

Challenges Facing Quantum Computing

Despite its immense promise, quantum computing faces significant hurdles before it becomes mainstream:

- Error Rates and Decoherence: Maintaining stable qubits long enough to complete computations remains a major challenge.

- Scalability: Building large-scale quantum processors without introducing excessive noise is a formidable engineering task.

- Cost and Accessibility: Quantum hardware is prohibitively expensive, limiting access to researchers and enterprises with substantial funding.

- Integration with Classical Systems: Most practical solutions will likely involve hybrid quantum-classical workflows, requiring seamless integration between the two paradigms.
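Error correction counters high error rates with redundancy. The simplest illustration is the three-bit repetition code with majority voting, the classical ancestor of the quantum bit-flip code. This sketch models only independent bit flips with probability p. Real quantum codes must also handle phase errors, and because they cannot copy qubit states, they detect errors by measuring parities instead. The function name is hypothetical.

```python
from itertools import product

def logical_error_rate(p: float) -> float:
    """Exact failure probability of a 3-bit repetition code with majority voting.

    Each of the three physical bits flips independently with probability p;
    decoding fails when two or more of them flip.
    """
    total = 0.0
    for flips in product((0, 1), repeat=3):
        k = sum(flips)
        if k >= 2:
            total += p ** k * (1 - p) ** (3 - k)
    return total

for p in (0.1, 0.01, 0.001):
    closed_form = 3 * p**2 - 2 * p**3  # same quantity, derived by hand
    print(p, logical_error_rate(p), closed_form)
```

At p = 0.01, the encoded error rate drops to roughly 3 × 10^-4, but the benefit disappears as p approaches 0.5. This mirrors the threshold idea behind quantum error correction: redundancy only helps when physical errors are rare enough.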

For a balanced perspective on these challenges, read this analysis by MIT Technology Review.

Final Thoughts: A New Era of Computation

Quantum computing isn’t just an incremental improvement over traditional computing—it’s a fundamentally different way of thinking about information processing. By harnessing the bizarre yet beautiful rules of quantum mechanics, we stand on the brink of solving problems that were once thought impossible.

However, it’s important to temper expectations. Quantum supremacy, the point at which a quantum computer outperforms the best classical supercomputer on some task, has been claimed only for specific, carefully chosen problems such as random circuit sampling. Widespread practical adoption is still years away. Nonetheless, the progress made so far underscores the transformative potential of quantum technologies.

As researchers continue to push the boundaries of what’s possible, staying informed about developments in quantum computing is essential for anyone interested in the future of technology. Whether you’re a scientist, engineer, or curious enthusiast, now is the perfect time to dive into this fascinating field. For ongoing updates, follow trusted sources like Quantum Computing Report and Quanta Magazine.